Compare commits

1 commit

master ... revert-53-

| Author | SHA1 | Date |
|---|---|---|
| | 566a5658b9 | |

`.gitattributes` (vendored), 1 line changed

```diff
@@ -1 +0,0 @@
-*.py text=auto eol=lf
```
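The removed rule `*.py text=auto eol=lf` asks Git to treat `*.py` files as text (when its auto-detection agrees) and to check them out with LF line endings. If you want to see which Python files in a working tree still carry CRLF endings, a quick check could look like the sketch below. `has_crlf`, `to_lf` and `find_crlf_py_files` are hypothetical helpers that are not part of the repository. They only inspect bytes on disk and do not reproduce how Git itself normalizes files.

```python
import tempfile
from pathlib import Path
from typing import List


def has_crlf(data: bytes) -> bool:
    """Return True if the bytes contain at least one CRLF line ending."""
    return b"\r\n" in data


def to_lf(data: bytes) -> bytes:
    """Convert CRLF line endings to LF; lone LF characters are left alone."""
    return data.replace(b"\r\n", b"\n")


def find_crlf_py_files(root: str) -> List[str]:
    """Return sorted, root-relative paths of *.py files that still use CRLF."""
    base = Path(root)
    return sorted(
        p.relative_to(base).as_posix()
        for p in base.rglob("*.py")
        if p.is_file() and has_crlf(p.read_bytes())
    )


if __name__ == "__main__":
    # Demo on a throwaway directory: one CRLF file, one LF file.
    with tempfile.TemporaryDirectory() as tmp:
        Path(tmp, "a.py").write_bytes(b"print(1)\r\n")
        Path(tmp, "b.py").write_bytes(b"print(2)\n")
        print(find_crlf_py_files(tmp))  # ['a.py']
```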

`.gitignore` (vendored), 1 line changed

```diff
@@ -1,4 +1,3 @@
-*.DS_Store
 # Byte-compiled / optimized / DLL files
 __pycache__/
 *.py[cod]
```
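The patterns in this `.gitignore` are simple globs. The only slash is the trailing one on `__pycache__/`, which limits that pattern to directories. The sketch below approximates how such patterns match a path by testing each path component with `fnmatch.fnmatchcase`. It is a simplification resting on stated assumptions: it does not handle `!` negation, anchored patterns or `**`. For an authoritative answer, use `git check-ignore -v <path>`. `is_ignored` and `PATTERNS` are hypothetical names.

```python
import fnmatch
from typing import Sequence

# Patterns taken from the master-side .gitignore in this diff.
PATTERNS = ("*.DS_Store", "__pycache__/", "*.py[cod]")


def is_ignored(path: str, is_dir: bool, patterns: Sequence[str] = PATTERNS) -> bool:
    """Approximate gitignore matching for slash-free patterns.

    Each pattern is tried against every component of the '/'-separated
    path, because a matching parent directory ignores everything below it.
    A trailing '/' restricts a pattern to directories. Negation ('!'),
    anchored patterns and '**' are not handled.
    """
    parts = [p for p in path.split("/") if p]
    for raw in patterns:
        dir_only = raw.endswith("/")
        pattern = raw.rstrip("/")
        for i, part in enumerate(parts):
            is_last = i == len(parts) - 1
            # Parent components are always directories; the last one is a
            # directory only if the caller says so.
            if dir_only and is_last and not is_dir:
                continue
            if fnmatch.fnmatchcase(part, pattern):
                return True
    return False


if __name__ == "__main__":
    for candidate in ("core.pyc", "__pycache__/core.cpython-38.pyc",
                      "photos/.DS_Store", "ADC_function.py"):
        print(candidate, is_ignored(candidate, is_dir=False))
```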

`.idea/.gitignore` (generated, vendored), 2 lines changed

```diff
@@ -1,2 +0,0 @@
-# Default ignored files
-/workspace.xml
```

`.idea/AV_Data_Capture.iml` (generated), 8 lines changed

```diff
@@ -1,8 +0,0 @@
-<?xml version="1.0" encoding="UTF-8"?>
-<module type="PYTHON_MODULE" version="4">
-  <component name="NewModuleRootManager">
-    <content url="file://$MODULE_DIR$" />
-    <orderEntry type="jdk" jdkName="Python 3.8 (AV_Data_Capture)" jdkType="Python SDK" />
-    <orderEntry type="sourceFolder" forTests="false" />
-  </component>
-</module>
```

`.idea/dictionaries/tanpengsccd.xml` (generated), 19 lines changed

```diff
@@ -1,19 +0,0 @@
-<component name="ProjectDictionaryState">
-  <dictionary name="tanpengsccd">
-    <words>
-      <w>avsox</w>
-      <w>emby</w>
-      <w>fanart</w>
-      <w>fanza</w>
-      <w>javbus</w>
-      <w>javdb</w>
-      <w>jellyfin</w>
-      <w>khtml</w>
-      <w>kodi</w>
-      <w>mgstage</w>
-      <w>plex</w>
-      <w>pondo</w>
-      <w>rmvb</w>
-    </words>
-  </dictionary>
-</component>
```
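The deleted `.idea/dictionaries/tanpengsccd.xml` is a PyCharm spell-checker dictionary, here named after the user `tanpengsccd`. Each `<w>` element holds one accepted word. If you want to keep the word list somewhere else before the file goes away, the standard-library XML parser can read it, as in this minimal sketch. `dictionary_words` and `SAMPLE` are illustrative names, and the sample keeps only two of the words listed in this diff.

```python
import xml.etree.ElementTree as ET
from typing import List

# A shortened copy of the deleted file, kept to two of its words.
SAMPLE = """<component name="ProjectDictionaryState">
  <dictionary name="tanpengsccd">
    <words>
      <w>avsox</w>
      <w>emby</w>
    </words>
  </dictionary>
</component>"""


def dictionary_words(xml_text: str) -> List[str]:
    """Return the text of every <w> element, in document order."""
    root = ET.fromstring(xml_text)
    return [w.text.strip() for w in root.iter("w") if w.text and w.text.strip()]


if __name__ == "__main__":
    print(dictionary_words(SAMPLE))  # ['avsox', 'emby']
```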

`.idea/inspectionProfiles/profiles_settings.xml` (generated), 6 lines changed

```diff
@@ -1,6 +0,0 @@
-<component name="InspectionProjectProfileManager">
-  <settings>
-    <option name="USE_PROJECT_PROFILE" value="false" />
-    <version value="1.0" />
-  </settings>
-</component>
```

`.idea/misc.xml` (generated), 7 lines changed

```diff
@@ -1,7 +0,0 @@
-<?xml version="1.0" encoding="UTF-8"?>
-<project version="4">
-  <component name="JavaScriptSettings">
-    <option name="languageLevel" value="ES6" />
-  </component>
-  <component name="ProjectRootManager" version="2" project-jdk-name="Python 3.8 (AV_Data_Capture)" project-jdk-type="Python SDK" />
-</project>
```

`.idea/modules.xml` (generated), 8 lines changed

```diff
@@ -1,8 +0,0 @@
-<?xml version="1.0" encoding="UTF-8"?>
-<project version="4">
-  <component name="ProjectModuleManager">
-    <modules>
-      <module fileurl="file://$PROJECT_DIR$/.idea/AV_Data_Capture.iml" filepath="$PROJECT_DIR$/.idea/AV_Data_Capture.iml" />
-    </modules>
-  </component>
-</project>
```

`.idea/other.xml` (generated), 6 lines changed

```diff
@@ -1,6 +0,0 @@
-<?xml version="1.0" encoding="UTF-8"?>
-<project version="4">
-  <component name="PySciProjectComponent">
-    <option name="PY_SCI_VIEW_SUGGESTED" value="true" />
-  </component>
-</project>
```

`.idea/vcs.xml` (generated), 6 lines changed

```diff
@@ -1,6 +0,0 @@
-<?xml version="1.0" encoding="UTF-8"?>
-<project version="4">
-  <component name="VcsDirectoryMappings">
-    <mapping directory="$PROJECT_DIR$" vcs="Git" />
-  </component>
-</project>
```

`ADC_function.py`, 224 lines changed

```diff
@@ -1,127 +1,97 @@
-#!/usr/bin/env python3
-# -*- coding: utf-8 -*-
-
-import requests
-from configparser import ConfigParser
-import os
-import re
-import time
-import sys
-from lxml import etree
-import sys
-import io
-from ConfigApp import ConfigApp
-# sys.stdout = io.TextIOWrapper(sys.stdout.buffer, errors = 'replace', line_buffering = True)
-# sys.setdefaultencoding('utf-8')
-
-# config_file='config.ini'
-# config = ConfigParser()
-
-# if os.path.exists(config_file):
-#     try:
-#         config.read(config_file, encoding='UTF-8')
-#     except:
-#         print('[-]Config.ini read failed! Please use the offical file!')
-# else:
-#     print('[+]config.ini: not found, creating...',end='')
-#     with open("config.ini", "wt", encoding='UTF-8') as code:
-#         print("[common]", file=code)
-#         print("main_mode = 1", file=code)
-#         print("failed_output_folder = failed", file=code)
-#         print("success_output_folder = JAV_output", file=code)
-#         print("", file=code)
-#         print("[proxy]",file=code)
-#         print("proxy=127.0.0.1:1081",file=code)
-#         print("timeout=10", file=code)
-#         print("retry=3", file=code)
-#         print("", file=code)
-#         print("[Name_Rule]", file=code)
-#         print("location_rule=actor+'/'+number",file=code)
-#         print("naming_rule=number+'-'+title",file=code)
-#         print("", file=code)
-#         print("[update]",file=code)
-#         print("update_check=1",file=code)
-#         print("", file=code)
-#         print("[media]", file=code)
-#         print("media_warehouse=emby", file=code)
-#         print("#emby plex kodi", file=code)
-#         print("", file=code)
-#         print("[escape]", file=code)
-#         print("literals=\\", file=code)
-#         print("", file=code)
-#         print("[movie_location]", file=code)
-#         print("path=", file=code)
-#         print("", file=code)
-#     print('.',end='')
-#     time.sleep(2)
-#     print('.')
-#     print('[+]config.ini: created!')
-#     print('[+]Please restart the program!')
-#     time.sleep(4)
-#     os._exit(0)
-# try:
-#     config.read(config_file, encoding='UTF-8')
-# except:
-#     print('[-]Config.ini read failed! Please use the offical file!')
-
-config = ConfigApp()
-
-
-def get_network_settings():
-    try:
-        proxy = config.proxy
-        timeout = int(config.timeout)
-        retry_count = int(config.retry)
-        assert timeout > 0
-        assert retry_count > 0
-    except:
-        raise ValueError("[-]Proxy config error! Please check the config.")
-    return proxy, timeout, retry_count
-
-def getDataState(json_data): # 元数据获取失败检测
-    if json_data['title'] == '' or json_data['title'] == 'None' or json_data['title'] == 'null':
-        return 0
-    else:
-        return 1
-
-def ReadMediaWarehouse():
-    return config.media_server
-
-def UpdateCheckSwitch():
-    check=str(config.update_check)
-    if check == '1':
-        return '1'
-    elif check == '0':
-        return '0'
-    elif check == '':
-        return '0'
-
-def getXpathSingle(htmlcode,xpath):
-    html = etree.fromstring(htmlcode, etree.HTMLParser())
-    result1 = str(html.xpath(xpath)).strip(" ['']")
-    return result1
-
-def get_html(url,cookies = None):#网页请求核心
-    proxy, timeout, retry_count = get_network_settings()
-    i = 0
-    print(url)
-    while i < retry_count:
-        try:
-            if not proxy == '':
-                proxies = {"http": proxy, "https": proxy}
-                headers = {'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/60.0.3100.0 Safari/537.36'}
-                getweb = requests.get(str(url), headers=headers, timeout=timeout, proxies=proxies, cookies=cookies)
-                getweb.encoding = 'utf-8'
-                return getweb.text
-            else:
-                headers = {'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/68.0.3440.106 Safari/537.36'}
-                getweb = requests.get(str(url), headers=headers, timeout=timeout, cookies=cookies)
-                getweb.encoding = 'utf-8'
-                return getweb.text
-        except Exception as e:
-            print(e)
-            i += 1
-            print('[-]Connect retry '+str(i)+'/'+str(retry_count))
-    print('[-]Connect Failed! Please check your Proxy or Network!')
-
-
+#!/usr/bin/env python3
+# -*- coding: utf-8 -*-
+
+import requests
+from configparser import ConfigParser
+import os
+import re
+import time
+import sys
+
+config_file='config.ini'
+config = ConfigParser()
+
+if os.path.exists(config_file):
+    try:
+        config.read(config_file, encoding='UTF-8')
+    except:
+        print('[-]Config.ini read failed! Please use the offical file!')
+else:
+    print('[+]config.ini: not found, creating...')
+    with open("config.ini", "wt", encoding='UTF-8') as code:
+        print("[proxy]",file=code)
+        print("proxy=127.0.0.1:1080",file=code)
+        print("timeout=10", file=code)
+        print("retry=3", file=code)
+        print("", file=code)
+        print("[Name_Rule]", file=code)
+        print("location_rule='JAV_output/'+actor+'/'+number",file=code)
+        print("naming_rule=number+'-'+title",file=code)
+        print("", file=code)
+        print("[update]",file=code)
+        print("update_check=1",file=code)
+        print("", file=code)
+        print("[media]", file=code)
+        print("media_warehouse=emby", file=code)
+        print("#emby or plex", file=code)
+        print("#plex only test!", file=code)
+        print("", file=code)
+        print("[directory_capture]", file=code)
+        print("switch=0", file=code)
+        print("directory=", file=code)
+        print("", file=code)
+        print("everyone switch:1=on, 0=off", file=code)
+    time.sleep(2)
+    print('[+]config.ini: created!')
+    try:
+        config.read(config_file, encoding='UTF-8')
+    except:
+        print('[-]Config.ini read failed! Please use the offical file!')
+
+def ReadMediaWarehouse():
+    return config['media']['media_warehouse']
+
+def UpdateCheckSwitch():
+    check=str(config['update']['update_check'])
+    if check == '1':
+        return '1'
+    elif check == '0':
+        return '0'
+    elif check == '':
+        return '0'
+def get_html(url,cookies = None):#网页请求核心
+    try:
+        proxy = config['proxy']['proxy']
+        timeout = int(config['proxy']['timeout'])
+        retry_count = int(config['proxy']['retry'])
+    except:
+        print('[-]Proxy config error! Please check the config.')
+    i = 0
+    while i < retry_count:
+        try:
+            if not str(config['proxy']['proxy']) == '':
+                proxies = {"http": "http://" + proxy,"https": "https://" + proxy}
+                headers = {'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/60.0.3100.0 Safari/537.36'}
+                getweb = requests.get(str(url), headers=headers, timeout=timeout,proxies=proxies, cookies=cookies)
+                getweb.encoding = 'utf-8'
+                return getweb.text
+            else:
+                headers = {'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/68.0.3440.106 Safari/537.36'}
+                getweb = requests.get(str(url), headers=headers, timeout=timeout, cookies=cookies)
+                getweb.encoding = 'utf-8'
+                return getweb.text
+        except requests.exceptions.RequestException:
+            i += 1
+            print('[-]Connect retry '+str(i)+'/'+str(retry_count))
+        except requests.exceptions.ConnectionError:
+            i += 1
+            print('[-]Connect retry '+str(i)+'/'+str(retry_count))
+        except requests.exceptions.ProxyError:
+            i += 1
+            print('[-]Connect retry '+str(i)+'/'+str(retry_count))
+        except requests.exceptions.ConnectTimeout:
+            i += 1
+            print('[-]Connect retry '+str(i)+'/'+str(retry_count))
+    print('[-]Connect Failed! Please check your Proxy or Network!')
+
+
```
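Both sides of `get_html` wrap `requests.get` in the same retry loop. A counter `i` runs up to `retry_count`, which the revert side reads from the `[proxy]` section of `config.ini` and the master side gets from `ConfigApp` through `get_network_settings()`. Each failure prints `[-]Connect retry i/n`. Once the counter is exhausted, the function prints a failure message and falls through, so it returns `None`.

On the revert side, the first handler already catches `requests.exceptions.RequestException`. That is the base class of `ConnectionError`, `ProxyError` and `ConnectTimeout`, so the three handlers after it can never run.

The loop itself can be factored out and tested without a network, as in the sketch below. `fetch_with_retry` is a hypothetical helper, and the commented `requests` call shows one assumed way to plug it in.

```python
import time
from typing import Callable, Optional, Tuple, Type, TypeVar

T = TypeVar("T")


def fetch_with_retry(
    fetch: Callable[[], T],
    retry_count: int,
    retry_on: Tuple[Type[BaseException], ...] = (Exception,),
    delay: float = 0.0,
) -> Optional[T]:
    """Call fetch() up to retry_count times and return its first result.

    Mirrors the loop in get_html(): every failure prints
    '[-]Connect retry i/n'; when all attempts fail, a final message is
    printed and None is returned.
    """
    for attempt in range(1, retry_count + 1):
        try:
            return fetch()
        except retry_on:
            print('[-]Connect retry ' + str(attempt) + '/' + str(retry_count))
            if delay:
                time.sleep(delay)
    print('[-]Connect Failed! Please check your Proxy or Network!')
    return None


# Assumed wiring with requests (not executed here); the original also sets
# response.encoding = 'utf-8' before reading .text:
#   import requests
#   text = fetch_with_retry(
#       lambda: requests.get(url, headers=headers, timeout=timeout,
#                            proxies=proxies, cookies=cookies).text,
#       retry_count,
#       retry_on=(requests.exceptions.RequestException,),
#   )
```

Catching the single base class `RequestException` is enough to cover connection, proxy and timeout failures.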

```diff
@@ -1,416 +1,153 @@
-#!/usr/bin/env python3
-# -*- coding: utf-8 -*-
-
-import glob
-import os
-import time
-import fuckit
-from tenacity import retry, stop_after_delay, wait_fixed
-import json
-import shutil
-import itertools
-import argparse
-from pathlib import Path
-
-from core import *
-from ConfigApp import ConfigApp
-from PathNameProcessor import PathNameProcessor
-
-# TODO 封装聚合解耦:CORE
-# TODO (学习)统一依赖管理工具
-# TODO 不同媒体服务器尽量兼容统一一种元数据 如nfo 海报等(emby,jellyfin,plex)
-# TODO 字幕整理功能 文件夹中读取所有字幕 并提番号放入对应缓存文件夹中TEMP
-
-config = ConfigApp()
-
-
-def safe_list_get(list_in, idx, default=None):
-    """
-    数组安全取值
-    :param list_in:
-    :param idx:
-    :param default:
-    :return:
-    """
-    try:
-        return list_in[idx]
-    except IndexError:
-        return default
-
-
-def UpdateCheck(version):
-    if UpdateCheckSwitch() == '1':
-        html2 = get_html('https://raw.githubusercontent.com/yoshiko2/AV_Data_Capture/master/update_check.json')
-        html = json.loads(str(html2))
-
-        if not version == html['version']:
-            print('[*] * New update ' + html['version'] + ' *')
-            print('[*] ↓ Download ↓')
-            print('[*] ' + html['download'])
-            print('[*]======================================================')
-    else:
-        print('[+]Update Check disabled!')
-
-
-def argparse_get_file():
-    parser = argparse.ArgumentParser()
-    parser.add_argument("file", default='', nargs='?', help="Write the file path on here")
-    args = parser.parse_args()
-    if args.file == '':
-        return ''
-    else:
-        return args.file
-
-
-def movie_lists(escape_folders):
-    escape_folders = re.split('[,,]', escape_folders)
-    total = []
-
-    for root, dirs, files in os.walk(config.search_folder):
-        if root in escape_folders:
-            continue
-        for file in files:
-            if re.search(PathNameProcessor.pattern_of_file_name_suffixes, file, re.IGNORECASE):
-                path = os.path.join(root, file)
-                total.append(path)
-    return total
-
-
-# def CEF(path):
-#     try:
-#         files = os.listdir(path) # 获取路径下的子文件(夹)列表
-#         for file in files:
-#             os.removedirs(path + '/' + file) # 删除这个空文件夹
-#             print('[+]Deleting empty folder', path + '/' + file)
-#     except:
-#         a = ''
-#
-
-
-def get_numbers(paths):
-    """提取对应路径的番号+集数"""
-
-    def get_number(filepath, absolute_path=False):
-        """
-        获取番号,集数
-        :param filepath:
-        :param absolute_path:
-        :return:
-        """
-        name = filepath.upper() # 转大写
-        if absolute_path:
-            name = name.replace('\\', '/')
-        # 移除干扰字段
-        name = PathNameProcessor.remove_distractions(name)
-        # 抽取 文件路径中可能存在的尾部集数,和抽取尾部集数的后的文件路径
-        suffix_episode, name = PathNameProcessor.extract_suffix_episode(name)
-        # 抽取 文件路径中可能存在的 番号后跟随的集数 和 处理后番号
-        episode_behind_code, code_number = PathNameProcessor.extract_code(name)
-        # 无番号 则设置空字符
-        code_number = code_number if code_number else ''
-        # 优先取尾部集数,无则取番号后的集数(几率低),都无则为空字符
-        episode = suffix_episode if suffix_episode else episode_behind_code if episode_behind_code else ''
-
-        return code_number, episode
-
-    maps = {}
-    for path in paths:
-        number, episode = get_number(path)
-        maps[path] = (number, episode)
-
-    return maps
-
-
-def create_folder(paths):
-    for path_to_make in paths:
-        if path_to_make:
-            try:
-                os.makedirs(path_to_make)
-            except FileExistsError as e:
-                # name = f'{folder=}'.split('=')[0].split('.')[-1]
-                print(path_to_make + " 已经存在")
-                pass
-            except Exception as exception:
-                print('! 创建文件夹 ' + path_to_make + ' 失败,文件夹路径错误或权限不够')
-                raise exception
-        else:
-            raise Exception('!创建的文件夹路径为空,请确认')
-
-
-if __name__ == '__main__':
-    version = '2.8.2'
-
-    print('[*]================== AV Data Capture ===================')
-    print('[*] Version ' + version)
-    print('[*]======================================================')
-
-    # UpdateCheck(version)
-
-    CreatFailedFolder(config.failed_folder)
-    os.chdir(os.getcwd())
-
-    # 创建文件夹
-    create_folder([config.failed_folder, config.search_folder, config.temp_folder])
+#!/usr/bin/env python3
+# -*- coding: utf-8 -*-
+
+import glob
+import os
+import time
+import re
+import sys
+from ADC_function import *
+import json
+import shutil
+from configparser import ConfigParser
+os.chdir(os.getcwd())
+
+# ============global var===========
+
+version='1.3'
+
+config = ConfigParser()
+config.read(config_file, encoding='UTF-8')
+
+Platform = sys.platform
+
+# ==========global var end=========
+
+def UpdateCheck():
+    if UpdateCheckSwitch() == '1':
+        html2 = get_html('https://raw.githubusercontent.com/yoshiko2/AV_Data_Capture/master/update_check.json')
+        html = json.loads(str(html2))
+
+        if not version == html['version']:
+            print('[*] * New update ' + html['version'] + ' *')
+            print('[*] * Download *')
+            print('[*] ' + html['download'])
+            print('[*]=====================================')
+    else:
+        print('[+]Update Check disabled!')
+def movie_lists():
+    global exclude_directory_1
+    global exclude_directory_2
+    directory = config['directory_capture']['directory']
+    total=[]
+    file_type = ['mp4','avi','rmvb','wmv','mov','mkv','flv','ts']
+    exclude_directory_1 = config['common']['failed_output_folder']
+    exclude_directory_2 = config['common']['success_output_folder']
+    if directory=='*':
+        remove_total = []
+        for o in file_type:
+            remove_total += glob.glob(r"./" + exclude_directory_1 + "/*." + o)
+            remove_total += glob.glob(r"./" + exclude_directory_2 + "/*." + o)
+        for i in os.listdir(os.getcwd()):
+            for a in file_type:
+                total += glob.glob(r"./" + i + "/*." + a)
+        for b in remove_total:
+            total.remove(b)
+        return total
+    for a in file_type:
+        total += glob.glob(r"./" + directory + "/*." + a)
+    return total
+def CreatFailedFolder():
+    if not os.path.exists('failed/'): # 新建failed文件夹
+        try:
+            os.makedirs('failed/')
+        except:
+            print("[-]failed!can not be make folder 'failed'\n[-](Please run as Administrator)")
+            os._exit(0)
+def lists_from_test(custom_nuber): #电影列表
+    a=[]
+    a.append(custom_nuber)
+    return a
+def CEF(path):
+    try:
+        files = os.listdir(path) # 获取路径下的子文件(夹)列表
+        for file in files:
+            os.removedirs(path + '/' + file) # 删除这个空文件夹
+            print('[+]Deleting empty folder', path + '/' + file)
+    except:
+        a=''
+def rreplace(self, old, new, *max):
+    #从右开始替换文件名中内容,源字符串,将被替换的子字符串, 新字符串,用于替换old子字符串,可选字符串, 替换不超过 max 次
+    count = len(self)
+    if max and str(max[0]).isdigit():
+        count = max[0]
+    return new.join(self.rsplit(old, count))
+def getNumber(filepath):
+    filepath = filepath.replace('.\\','')
+    try: # 普通提取番号 主要处理包含减号-的番号
+        filepath = filepath.replace("_", "-")
+        filepath.strip('22-sht.me').strip('-HD').strip('-hd')
+        filename = str(re.sub("\[\d{4}-\d{1,2}-\d{1,2}\] - ", "", filepath)) # 去除文件名中时间
+        try:
+            file_number = re.search('\w+-\d+', filename).group()
+        except: # 提取类似mkbd-s120番号
+            file_number = re.search('\w+-\w+\d+', filename).group()
+        return file_number
+    except: # 提取不含减号-的番号
+        try:
+            filename = str(re.sub("ts6\d", "", filepath)).strip('Tokyo-hot').strip('tokyo-hot')
+            filename = str(re.sub(".*?\.com-\d+", "", filename)).replace('_', '')
+            file_number = str(re.search('\w+\d{4}', filename).group(0))
+            return file_number
+        except: # 提取无减号番号
+            filename = str(re.sub("ts6\d", "", filepath)) # 去除ts64/265
+            filename = str(re.sub(".*?\.com-\d+", "", filename))
+            file_number = str(re.match('\w+', filename).group())
+            file_number = str(file_number.replace(re.match("^[A-Za-z]+", file_number).group(),re.match("^[A-Za-z]+", file_number).group() + '-'))
+            return file_number
+
+def RunCore():
+    if Platform == 'win32':
+        if os.path.exists('core.py'):
+            os.system('python core.py' + ' "' + i + '" --number "' + getNumber(i) + '"') # 从py文件启动(用于源码py)
+        elif os.path.exists('core.exe'):
+            os.system('core.exe' + ' "' + i + '" --number "' + getNumber(i) + '"') # 从exe启动(用于EXE版程序)
+        elif os.path.exists('core.py') and os.path.exists('core.exe'):
+            os.system('python core.py' + ' "' + i + '" --number "' + getNumber(i) + '"') # 从py文件启动(用于源码py)
+    else:
+        if os.path.exists('core.py'):
+            os.system('python3 core.py' + ' "' + i + '" --number "' + getNumber(i) + '"') # 从py文件启动(用于源码py)
+        elif os.path.exists('core.exe'):
+            os.system('core.exe' + ' "' + i + '" --number "' + getNumber(i) + '"') # 从exe启动(用于EXE版程序)
+        elif os.path.exists('core.py') and os.path.exists('core.exe'):
+            os.system('python3 core.py' + ' "' + i + '" --number "' + getNumber(i) + '"') # 从py文件启动(用于源码py)
+
+if __name__ =='__main__':
+    print('[*]===========AV Data Capture===========')
+    print('[*] Version '+version)
+    print('[*]=====================================')
+    CreatFailedFolder()
+    UpdateCheck()
+    os.chdir(os.getcwd())
+
+    count = 0
+    count_all = str(len(movie_lists()))
+    print('[+]Find',str(len(movie_lists())),'movies')
+    for i in movie_lists(): #遍历电影列表 交给core处理
+        count = count + 1
+        percentage = str(count/int(count_all)*100)[:4]+'%'
+        print('[!] - '+percentage+' ['+str(count)+'/'+count_all+'] -')
+        try:
+            print("[!]Making Data for [" + i + "], the number is [" + getNumber(i) + "]")
+            RunCore()
+            print("[*]=====================================")
+        except: # 番号提取异常
+            print('[-]' + i + ' Cannot catch the number :')
+            print('[-]Move ' + i + ' to failed folder')
+            shutil.move(i, str(os.getcwd()) + '/' + 'failed/')
+            continue
+
+    CEF(exclude_directory_1)
+    CEF(exclude_directory_2)
+    print("[+]All finished!!!")
+    input("[+][+]Press enter key exit, you can check the error messge before you exit.\n[+][+]按回车键结束,你可以在结束之前查看和错误信息。")
```
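On the revert side, `getNumber()` pulls the movie code out of a file path with a cascade of regular expressions, tried in this order:

1. A hyphenated code such as `ABC-123` (`\w+-\d+`).
2. A form like `MKBD-S120` (`\w+-\w+\d+`).
3. A four-digit code without a hyphen (`\w+\d{4}`).
4. Any leading word, with a hyphen inserted after its letters.

One detail is worth knowing. `str.strip()` removes a *set of characters* from both ends of a string, not a substring. On top of that, the result of `filepath.strip('22-sht.me').strip('-HD').strip('-hd')` is discarded, so that line has no effect.

A compact, testable variant of the cascade follows. `extract_number` and the pattern constants are hypothetical names. The sketch searches only the file name without its extension, and it deliberately drops the `ts6x` and `*.com-` clean-ups, so it is a simplification rather than a drop-in replacement.

```python
import os
import re
from typing import Optional

# Date prefix such as "[2020-01-02] - ", removed before matching (as in getNumber).
DATE_PREFIX = re.compile(r"\[\d{4}-\d{1,2}-\d{1,2}\] - ")
HYPHENATED = re.compile(r"[A-Za-z]+-\d+")              # e.g. ABC-123
LETTER_SUFFIX = re.compile(r"[A-Za-z]+-[A-Za-z]+\d+")  # e.g. MKBD-S120
NO_HYPHEN = re.compile(r"([A-Za-z]+)(\d+)")            # e.g. abc123 -> abc-123


def extract_number(filepath: str) -> Optional[str]:
    """Return the movie code found in a file name, or None.

    Simplified from getNumber(): only the file name (without extension) is
    searched, and the ts6x / '*.com-' clean-ups are omitted.
    """
    name = os.path.basename(filepath.replace("\\", "/"))
    name = os.path.splitext(name)[0].replace("_", "-")
    name = DATE_PREFIX.sub("", name)
    for pattern in (HYPHENATED, LETTER_SUFFIX):
        match = pattern.search(name)
        if match:
            return match.group()
    match = NO_HYPHEN.search(name)
    if match:
        return match.group(1) + "-" + match.group(2)
    return None


if __name__ == "__main__":
    for path in ("./videos/ABC-123.mp4", "MKBD-S120.mkv", "abc123.avi", "holiday.mp4"):
        print(path, extract_number(path))
```

The extension is stripped first because otherwise a name like `holiday.mp4` would yield `mp-4` from the last fallback.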

```diff
-
-    # temp 文件夹中infos放 番号json信息,pics中放图片信息
-    path_infos = config.temp_folder + '/infos'
-    path_pics = config.temp_folder + '/pics'
-
-    create_folder([path_infos, path_pics])
-
-    # 遍历搜索目录下所有视频的路径
-    movie_list = movie_lists(config.escape_folder)
-
-    # 以下是从文本中提取测试的数据
-    # f = open('TestPathNFO.txt', 'r')
-    # f = open('TestPathSpecial.txt', 'r')
-    # movie_list = [line[:-1] for line in f.readlines()]
-    # f.close()
-
-    # 获取 番号,集数,路径 的字典->list
-    code_ep_paths = [[codeEposode[0], codeEposode[1], path] for path, codeEposode in get_numbers(movie_list).items()]
-    [print(i) for i in code_ep_paths]
-    # 按番号分组片子列表(重点),用于寻找相同番号的片子
-    '''
-    这里利用pandas分组 "https://pandas.pydata.org/pandas-docs/stable/user_guide/groupby.html"
-
-    '''
-    # # 设置打印时显示所有列
-    # pd.set_option('display.max_columns', None)
-    # # 显示所有行
-    # pd.set_option('display.max_rows', None)
-    # # 设置value的显示长度为100,默认为50
-    # pd.set_option('max_colwidth', 30)
-    # # 创建框架
-    # df = pd.DataFrame(code_ep_paths, columns=('code', 'ep', 'path'))
-    # # 以番号分组
-    # groupedCode_code_ep_paths = df.groupby(['code'])
-    # # print(df.groupby(['code', 'ep']).describe().unstack())
-    # grouped_code_ep = df.groupby(['code', 'ep'])['path']
-    #
-    sorted_code_list = sorted(code_ep_paths, key=lambda code_ep_path: code_ep_path[0])
-    group_code_list = itertools.groupby(sorted_code_list, key=lambda code_ep_path: code_ep_path[0])
-
-
-    def group_code_list_to_dict(group_code_list):
-        data_dict = {}
-        for code, code_ep_path_group in group_code_list:
-            code_ep_path_list = list(code_ep_path_group)
-            eps_of_code = {}
-            group_ep_list = itertools.groupby(code_ep_path_list, key=lambda code_ep_path: code_ep_path[1])
-            for ep, group_ep_group in group_ep_list:
-                group_ep_list = list(group_ep_group)
-                eps_of_code[ep] = [code_ep_path[2] for code_ep_path in group_ep_list]
-            data_dict[code] = eps_of_code
-
-        return data_dict
-
-
-    def print_same_code_ep_path(data_dict_in):
-        for code_in in data_dict_in:
-            ep_path_list = data_dict_in[code_in]
-            if len(ep_path_list) > 1:
-                print('--' * 60)
-                print("|" + (code_in if code_in else 'unknown') + ":")
-
-                # group_ep_list = itertools.groupby(code_ep_path_list.items(), key=lambda code_ep_path: code_ep_path[0])
-                for ep in ep_path_list:
-                    path_list = ep_path_list[ep]
-                    print('--' * 12)
-                    ep = ep if ep else ' '
-                    if len(path_list) == 1:
-                        print('| 集数:' + ep + ' 文件: ' + path_list[0])
-                    else:
-                        print('| 集数:' + ep + ' 文件: ')
-                        for path in path_list:
-                            print('| ' + path)
-
-            else:
-                pass
-
-
```
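On the master side, `group_code_list_to_dict()` builds `{code: {ep: [path, ...]}}` with two nested `itertools.groupby` calls. This has two pitfalls.

1. `groupby` merges only *consecutive* items, and the list is sorted by code alone. Two files with the same code and episode that are not adjacent therefore form two groups, and the second `eps_of_code[ep] = ...` assignment replaces the first.
2. `print_same_code_ep_path()` tests `len(ep_path_list) > 1`, which counts distinct episodes. Two copies with the same code and the same (or empty) episode are therefore not reported.

The sketch below uses a nested `defaultdict` instead, which does not depend on input order, and it counts paths rather than episodes. `group_by_code_and_ep`, `codes_with_several_files` and `groupby_pitfall` are hypothetical names, and the last one only reproduces the overwrite for illustration.

```python
from collections import defaultdict
from itertools import groupby
from typing import Dict, Iterable, List, Sequence

Grouped = Dict[str, Dict[str, List[str]]]


def group_by_code_and_ep(rows: Iterable[Sequence[str]]) -> Grouped:
    """Build {code: {ep: [path, ...]}} from [code, ep, path] rows, in any order."""
    grouped: Dict[str, Dict[str, List[str]]] = defaultdict(lambda: defaultdict(list))
    for code, ep, path in rows:
        grouped[code][ep].append(path)
    # Plain dicts are easier to print and compare than nested defaultdicts.
    return {code: dict(eps) for code, eps in grouped.items()}


def codes_with_several_files(grouped: Grouped) -> List[str]:
    """Codes that have more than one file in total, whatever their episodes."""
    return sorted(code for code, eps in grouped.items()
                  if sum(len(paths) for paths in eps.values()) > 1)


def groupby_pitfall(rows: List[Sequence[str]]) -> Dict[str, List[str]]:
    """Reproduce the inner loop of group_code_list_to_dict for one code:
    groupby() on rows not sorted by episode splits a repeated episode into
    separate groups, and each assignment overwrites the previous one."""
    eps: Dict[str, List[str]] = {}
    for ep, group in groupby(rows, key=lambda row: row[1]):
        eps[ep] = [row[2] for row in group]
    return eps
```

Alternatively, sorting by `(code, ep)` before calling `groupby` would also avoid the split groups.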

```diff
-    # 分好组的数据 {code:{ep:[path]}}
-    data_dict_groupby_code_ep = group_code_list_to_dict(group_code_list)
-
-    print('--' * 100)
-    print("找到影片数量:" + str(len(movie_list)))
-    print("合计番号数量:" + str(len(data_dict_groupby_code_ep)) + " (多个相同番号的影片只统计一个,不能识别的番号 都统一为'unknown')")
-    print('Warning:!!!! 以下为相同番号的电影明细')
-    print('◤' + '--' * 80)
-    print_same_code_ep_path(data_dict_groupby_code_ep)
-    print('◣' + '--' * 80)
-
-    isContinue = input('任意键继续? N 退出 \n')
-    if isContinue.strip(' ') == "N":
-        exit(1)
-
-
-    # ========== 野鸡番号拖动 ==========
-    # number_argparse = argparse_get_file()
-    # if not number_argparse == '':
-    #     print("[!]Making Data for [" + number_argparse + "], the number is [" + getNumber(number_argparse,
-    #                                                                                   absolute_path=True) + "]")
-    #     nfo = core_main(number_argparse, getNumber(number_argparse, absolute_path=True))
-    #     print("[*]======================================================")
-    #     CEF(config.success_folder)
-    #     CEF(config.failed_folder)
-    #     print("[+]All finished!!!")
-    #     input("[+][+]Press enter key exit, you can check the error messge before you exit.")
-    #     os._exit(0)
-    # ========== 野鸡番号拖动 ==========
-
-    def download_code_infos(code_list, is_read_cache=True):
-        """
-        遍历按番号分组的集合,刮取番号信息并缓存
-
-        :param is_read_cache: 是否读取缓存数据
-        :param code_list:
-        :return: {code:nfo}
-        """
-        count_all_grouped = len(code_list)
-        count = 0
-        code_info_dict = {}
-
-        for code in code_list:
-            count = count + 1
-            percentage = str(count / int(count_all_grouped) * 100)[:4] + '%'
-            print('[!] - ' + percentage + ' [' + str(count) + '/' + str(count_all_grouped) + '] -')
-            try:
-                print("[!]搜刮数据 [" + code + "]")
-                if code:
-                    # 创建番号的文件夹
-                    file_path = path_infos + '/' + code + '.json'
-                    nfo = {}
-                    # 读取缓存信息,如果没有则联网搜刮
-
-                    path = Path(file_path)
-                    if is_read_cache and (path.exists() and path.is_file() and path.stat().st_size > 0):
-                        print('找到缓存信息')
-                        with open(file_path) as fp:
-                            nfo = json.load(fp)
-                    else:
-
-                        # 核心功能 - 联网抓取信息字典
-                        print('联网搜刮')
-                        nfo = core_main(code)
-                        print('正在写入', end='')
-
-                        # 把缓存信息写入缓存文件夹中,有时会设备占用而失败,重试即可
-                        @retry(stop=stop_after_delay(3), wait=wait_fixed(2))
-                        def read_file():
-                            with open(file_path, 'w') as fp:
-                                json.dump(nfo, fp)
-
-                        read_file()
-                        print('完成!')
-                    # 将番号信息放入字典
-                    code_info_dict[code] = nfo
-                print("[*]======================================================")
-
-            except Exception as e: # 番号的信息获取失败
-                code_info_dict[code] = ''
-                print("找不到信息:" + code + ',Reason:' + str(e))
-
-                # if config.soft_link:
-                #     print('[-]Link', file_path_name, 'to failed folder')
-                #     os.symlink(file_path_name, config.failed_folder + '/')
-                # else:
-                #     try:
-                #         print('[-]Move ' + file_path_name + ' to failed folder:' + config.failed_folder)
-                #         shutil.move(file_path_name, config.failed_folder + '/')
-                #     except FileExistsError:
-                #         print('[!]File exists in failed!')
-                #     except:
-                #         print('[+]skip')
-                continue
-        return code_info_dict
-
-
```
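On the master side, `download_code_infos()` caches each scraped result as `<temp_folder>/infos/<code>.json`. A non-empty cache file is loaded instead of calling `core_main(code)`. New results are written through a nested function (named `read_file`, although it writes) decorated with tenacity's `@retry(stop=stop_after_delay(3), wait=wait_fixed(2))`. That decorator retries the write at 2-second intervals and gives up once 3 seconds have passed since the first attempt.

The sketch below shows the same read-or-fetch pattern using only the standard library. `load_or_fetch` is a hypothetical name. It differs from the original in three deliberate ways:

- A fixed attempt count stands in for tenacity's time-based stop.
- `encoding='utf-8'` is passed explicitly, where the original relies on the platform default.
- The data is written to a side file and swapped in with `os.replace`. This is an added precaution so that an interrupted write does not leave a truncated JSON file at the cache path, which the size check would later accept.

```python
import json
import os
import tempfile
import time
from pathlib import Path
from typing import Any, Callable, Dict


def load_or_fetch(cache_file: str, fetch: Callable[[], Dict[str, Any]],
                  use_cache: bool = True, attempts: int = 2,
                  wait: float = 2.0) -> Dict[str, Any]:
    """Return cached JSON if present and non-empty; otherwise fetch and cache it."""
    path = Path(cache_file)
    if use_cache and path.is_file() and path.stat().st_size > 0:
        with path.open(encoding="utf-8") as fp:
            return json.load(fp)

    data = fetch()
    tmp_path = path.with_name(path.name + ".tmp")
    for attempt in range(1, attempts + 1):
        try:
            # Write to a side file first, then swap it into place.
            with tmp_path.open("w", encoding="utf-8") as fp:
                json.dump(data, fp, ensure_ascii=False)
            os.replace(tmp_path, path)
            break
        except OSError:
            if attempt == attempts:
                raise
            time.sleep(wait)
    return data


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        target = os.path.join(tmp, "ABC-123.json")
        print(load_or_fetch(target, lambda: {"number": "ABC-123"}))  # fetched
        print(load_or_fetch(target, lambda: {}))  # served from the cache
```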

print('----------------------------------')

|

|

||||||

code_infos = download_code_infos(data_dict_groupby_code_ep)

|

|

||||||

print("----未找到番号数据的番号----")

|

|

||||||

print([print(code) for code in code_infos if code_infos[code] == ''])

|

|

||||||

print("-------------------------")

def download_images_of_nfos(code_info_dict):
    """
    Iterate over the code info and download each title's thumbnail and poster images.
    :param code_info_dict:
    :return: codes that have no info (so no images were downloaded)
    """

    code_list_empty_image = []
    for code in code_info_dict:
        nfo = code_info_dict[code]
        # Failed lookups are stored as '' (a str has no .keys()), so test truthiness instead
        if not nfo:
            code_list_empty_image.append(code)
            continue

        code_pics_folder_to_save = path_pics + '/' + code
        # 1 Create the per-code folder
        os.makedirs(code_pics_folder_to_save, exist_ok=True)
        # Download the thumbnail
        if nfo['imagecut'] == 3:  # 3 means thumbnail
            path = Path(code_pics_folder_to_save + '/' + 'thumb.png')
            if path.exists() and path.is_file() and path.stat().st_size > 0:
                print(code + ': thumbnail already cached')
            else:
                print(code + ': downloading thumbnail...')
                download_file(nfo['cover_small'], code_pics_folder_to_save, 'thumb.png')
                print(code + ': thumbnail downloaded')
        # Download the poster
        path = Path(code_pics_folder_to_save + '/' + 'poster.png')
        if path.exists() and path.is_file() and path.stat().st_size > 0:
            print(code + ': poster already cached')
        else:
            print(code + ': downloading poster...')
            download_file(nfo['cover'], code_pics_folder_to_save, 'poster.png')
            print(code + ': poster downloaded')
    return code_list_empty_image
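`download_images_of_nfos` treats an existing, non-empty file as a cache hit and downloads only otherwise. A minimal sketch of that check factored into one helper, assuming a caller-supplied `fetch(url, dest)` callable as a hypothetical stand-in for the script's `download_file`, whose exact signature is not shown in this diff. Note that `Path.is_file()` already returns `False` for a missing path, so a separate `exists()` test is not needed.

```python
import os
import tempfile
from pathlib import Path
from typing import Callable


def is_cached(path: Path) -> bool:
    """A file counts as cached only if it is a regular file and non-empty."""
    return path.is_file() and path.stat().st_size > 0


def ensure_image(url: str, folder: str, filename: str,
                 fetch: Callable[[str, Path], None]) -> bool:
    """Download url into folder/filename unless a cached copy exists.

    Returns True if a download was performed, False on a cache hit.
    `fetch` is a hypothetical stand-in for the script's download_file.
    """
    os.makedirs(folder, exist_ok=True)
    dest = Path(folder) / filename
    if is_cached(dest):
        return False
    fetch(url, dest)
    return True


if __name__ == "__main__":
    def fake_fetch(url: str, dest: Path) -> None:
        # Writes placeholder bytes instead of making a network request.
        dest.write_bytes(b"image-bytes")

    with tempfile.TemporaryDirectory() as tmp:
        folder = os.path.join(tmp, "ABP-123")
        print(ensure_image("http://example.invalid/p.png", folder, "poster.png", fake_fetch))  # True
        print(ensure_image("http://example.invalid/p.png", folder, "poster.png", fake_fetch))  # False
```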

code_list_empty = download_images_of_nfos(code_infos)
print("----Codes with no episode data found----")
for code in code_list_empty:
    print(code)
print("------Re-scraping codes with no episode data------")
code_infos_of_no_ep = download_code_infos(code_list_empty, is_read_cache=False)
print("----Codes still without data----")
for code in code_infos_of_no_ep:
    if code_infos_of_no_ep[code] == '':
        print(code)
print("----------------------")
# Start processing
# # 2 Create the thumbnail poster
# if nfo['imagecut'] == 3:  # 3 means thumbnail
#     download_cover_file(nfo['cover_small'], code, code_pics_folder_to_save)
# # 3 Create the image
# download_image(nfo['cover'], code, code_pics_folder_to_save)
# # 4 Crop
# crop_image(nfo['imagecut'], code, code_pics_folder_to_save)
# # 5 Background image
# copy_images_to_background_image(code, code_pics_folder_to_save)
# 6 Create name.nfo (not needed; when required, convert the JSON file under infos to an .nfo file)
# make_nfo_file(nfo, code, temp_path_to_save)
# Duplicate codes: append -CD[X] per episode; distinguish by video format and size
# TODO Mode 1, scrape: add .nfo, cover, content screenshots, etc.
# 6 Create name.nfo (not needed; when required, convert the JSON file under infos to an .nfo file)
make_nfo_file(nfo, code, temp_path_to_save)
# TODO Mode 2, organize: move videos and subtitles by rule into folders by actor, publisher, censored/uncensored, etc.

# if config.program_mode == '1':
#     if multi_part == 1:
#         number += part  # number now gets a CD1 suffix appended
#     smallCoverCheck(path, number, imagecut, json_data['cover_small'], c_word, option, filepath, config.failed_folder)  # check the small cover
#     imageDownload(option, json_data['cover'], number, c_word, path, multi_part, filepath, config.failed_folder)  # creatFoder returns the code's folder path
#     cutImage(option, imagecut, path, number, c_word)  # crop the image
#     copyRenameJpgToBackdrop(option, path, number, c_word)
#     PrintFiles(option, path, c_word, json_data['naming_rule'], part, cn_sub, json_data, filepath, config.failed_folder, tag)  # write the .nfo file
#     pasteFileToFolder(filepath, path, number, c_word)  # move the file
# # =======================================================================organize mode
# elif config.program_mode == '2':
#     pasteFileToFolder_mode2(filepath, path, multi_part, number, part, c_word)  # move the file

# CEF(config.success_folder)
# CEF(config.failed_folder)
print("[+]All finished!!!")
input("[+][+]Press Enter to exit; you can check the error messages before exiting.")
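The commented-out branch in `download_code_infos` either symlinks or moves a file whose lookup failed into `config.failed_folder`. Two details matter if it is re-enabled: `os.symlink(src, folder + '/')` creates the link at the folder path itself, which already exists, so the call fails; and `shutil.move` into a directory that already holds a same-named file raises `shutil.Error`, not `FileExistsError`. A minimal sketch that builds the destination path explicitly and raises `FileExistsError` itself; `move_to_failed` is a hypothetical helper, not part of the project.

```python
import os
import shutil
import tempfile


def move_to_failed(src: str, failed_folder: str, soft_link: bool = False) -> str:
    """Move (or symlink) src into failed_folder and return the destination path.

    Raises FileExistsError if an entry with the same name is already there,
    so callers can handle the "already in failed" case with one except clause.
    """
    os.makedirs(failed_folder, exist_ok=True)
    dest = os.path.join(failed_folder, os.path.basename(src))
    if os.path.lexists(dest):
        raise FileExistsError(dest)
    if soft_link:
        # The link is created at dest and points back to the original file.
        os.symlink(os.path.abspath(src), dest)
    else:
        shutil.move(src, dest)
    return dest


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        src = os.path.join(tmp, "ABP-123.mp4")
        open(src, "wb").close()
        failed = os.path.join(tmp, "failed")
        print(move_to_failed(src, failed))  # <tmp>/failed/ABP-123.mp4
        open(src, "wb").close()
        try:
            move_to_failed(src, failed)
        except FileExistsError:
            print("[!]File exists in failed!")
```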


28

ConfigApp.py

@ -1,28 +0,0 @@

from configparser import ConfigParser

from MediaServer import MediaServer


class ConfigApp:
    def __init__(self):
        config_file = 'config.ini'
        config = ConfigParser()
        config.read(config_file, encoding='UTF-8')
        self.success_folder = config['common']['success_output_folder']
        self.failed_folder = config['common']['failed_output_folder']  # output folder for failed files
        self.escape_folder = config['escape']['folders']  # folders to skip when scraping nested directories
        self.search_folder = config['common']['search_folder']  # search path
        self.temp_folder = config['common']['temp_folder']  # temporary resource path
        # ConfigParser values are strings; getboolean() accepts 1/0, yes/no, true/false, on/off
        self.soft_link = config.getboolean('common', 'soft_link')
        # self.escape_literals = config.getboolean('escape', 'literals')
        self.naming_rule = config['Name_Rule']['naming_rule']
        self.location_rule = config['Name_Rule']['location_rule']

        self.proxy = config['proxy']['proxy']
        self.timeout = float(config['proxy']['timeout'])
        self.retry = int(config['proxy']['retry'])
        # Enum lookup by name is case-sensitive, so normalize to the upper-case member names
        self.media_server = MediaServer[config['media']['media_warehouse'].upper()]
        self.update_check = config['update']['update_check']
        self.debug_mode = config['debug_mode']['switch']
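`ConfigParser` returns every value as a string, so comparing a raw value against an integer (for example `== 1`) is always false. A minimal sketch of the typed accessors (`getboolean`, `getfloat`, `getint`) and a case-insensitive enum lookup, using an inline INI string with the same section and key names that `ConfigApp` reads. The values are illustrative assumptions (the real `config.ini` is not part of this diff), and the enum is a stand-in copy of the `MediaServer` members.

```python
from configparser import ConfigParser
from enum import Enum, auto


class MediaServer(Enum):
    # Stand-in for the project's MediaServer enum (members only, methods omitted).
    EMBY = auto()
    PLEX = auto()
    KODI = auto()


# Illustrative values only; the real config.ini is not shown in this diff.
SAMPLE_INI = """
[common]
soft_link = 1

[proxy]
timeout = 10
retry = 3

[media]
media_warehouse = emby
"""

config = ConfigParser()
config.read_string(SAMPLE_INI)

raw = config['common']['soft_link']                   # always a str: '1'
soft_link = config.getboolean('common', 'soft_link')  # '1'/'yes'/'true'/'on' -> True
timeout = config.getfloat('proxy', 'timeout')
retry = config.getint('proxy', 'retry')
# Enum lookup by name is case-sensitive, so normalize before indexing.
media_server = MediaServer[config['media']['media_warehouse'].upper()]

print(repr(raw), raw == 1, soft_link, timeout, retry, media_server)
# '1' False True 10.0 3 MediaServer.EMBY
```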


@ -1,19 +0,0 @@

import pandas as pd
import numpy as np

df = pd.DataFrame({'A': ['foo', 'bar', 'foo', 'bar',
                         'foo', 'bar', 'foo', 'foo'],
                   'B': ['one', 'one', 'two', 'three',
                         'two', 'two', 'one', 'three'],
                   'C': np.random.randn(8),
                   'D': np.random.randn(8)})

print(df)
groupedA = df.groupby('A').describe()
groupedAB = df.groupby(['A', 'B'])['C']
print('---' * 18)
for a, b in groupedAB:
    print('--' * 18)
    print(a)
    print('-' * 18)
    print(b)
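Iterating over `df.groupby(['A', 'B'])['C']` yields `(key, group)` pairs: each key is an `(A, B)` tuple and each group holds the `C` values of the matching rows, with group keys sorted by default. The sketch below reproduces that pairing in plain Python so the structure is visible without pandas; it only mimics the keys and values, not the `Series` objects pandas actually returns, and fixed numbers stand in for `np.random.randn(8)`.

```python
from collections import defaultdict

A = ['foo', 'bar', 'foo', 'bar', 'foo', 'bar', 'foo', 'foo']
B = ['one', 'one', 'two', 'three', 'two', 'two', 'one', 'three']
C = [0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 0.7, 0.8]  # fixed values instead of random ones


def group_by_keys(a, b, c):
    """Map each (a, b) pair to the list of c values in row order, keys sorted."""
    groups = defaultdict(list)
    for key_a, key_b, value in zip(a, b, c):
        groups[(key_a, key_b)].append(value)
    return dict(sorted(groups.items()))


for key, values in group_by_keys(A, B, C).items():
    print(key, values)
```

The first line printed is `('bar', 'one') [0.2]`; the `('foo', 'one')` group collects rows 0 and 6, giving `[0.1, 0.7]`.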


@ -1,38 +0,0 @@

import pandas as pd
import numpy as np

'''
pandas, one of the "three musketeers" of Python data processing
https://pandas.pydata.org/pandas-docs/stable/user_guide
https://www.pypandas.cn/docs/getting_started/10min.html
'''

dates = pd.date_range('20130101', periods=6)
df = pd.DataFrame(np.random.randn(6, 4), index=dates, columns=list('ABCD'))
print(dates)
print(df)

df2 = pd.DataFrame({'A': 1.,
                    'B': pd.Timestamp('20130102'),
                    'C': pd.Series(1, index=list(range(4)), dtype='float32'),
                    'D': np.array([3] * 4, dtype='int32'),
                    'E': pd.Categorical(["test", "train", "test", "train"]),
                    'F': 'foo'})
print(df2)
print(df2.dtypes)
print(df.head())
print(df.tail(5))
print(df.index)
print(df.columns)
df.describe()  # summary statistics
df.T  # transpose: swap index and columns
df.sort_index(axis=1, ascending=False)  # sort by axis labels; axis=1 is the columns, axis=0 the index
df.sort_values(by='B')  # sort rows by the values in column B

# Select a column
df.A
df['A']
# Select rows
df['20130102':'20130104']
df[0:3]
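The two row slices at the end follow different rules: a label slice such as `df['20130102':'20130104']` includes both endpoints, while a positional slice such as `df[0:3]` excludes the stop position, as Python lists do. A plain-Python sketch of both rules over the same six daily labels; no pandas is involved, and `label_slice` is a hypothetical helper that assumes the labels are sorted.

```python
from bisect import bisect_left, bisect_right
from datetime import date, timedelta

# Same six daily labels as pd.date_range('20130101', periods=6).
labels = [date(2013, 1, 1) + timedelta(days=i) for i in range(6)]


def label_slice(sorted_labels, start, stop):
    """Inclusive on both ends, like slicing a sorted index by label."""
    lo = bisect_left(sorted_labels, start)
    hi = bisect_right(sorted_labels, stop)
    return sorted_labels[lo:hi]


by_label = label_slice(labels, date(2013, 1, 2), date(2013, 1, 4))
by_position = labels[0:3]  # stop index 3 is excluded

print([d.isoformat() for d in by_label])     # ['2013-01-02', '2013-01-03', '2013-01-04']
print([d.isoformat() for d in by_position])  # ['2013-01-01', '2013-01-02', '2013-01-03']
```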


@ -1,28 +0,0 @@

|

|||||||

from enum import Enum, auto

|

|

||||||

|

|

||||||

|

|

||||||

class MediaServer(Enum):

|

|

||||||

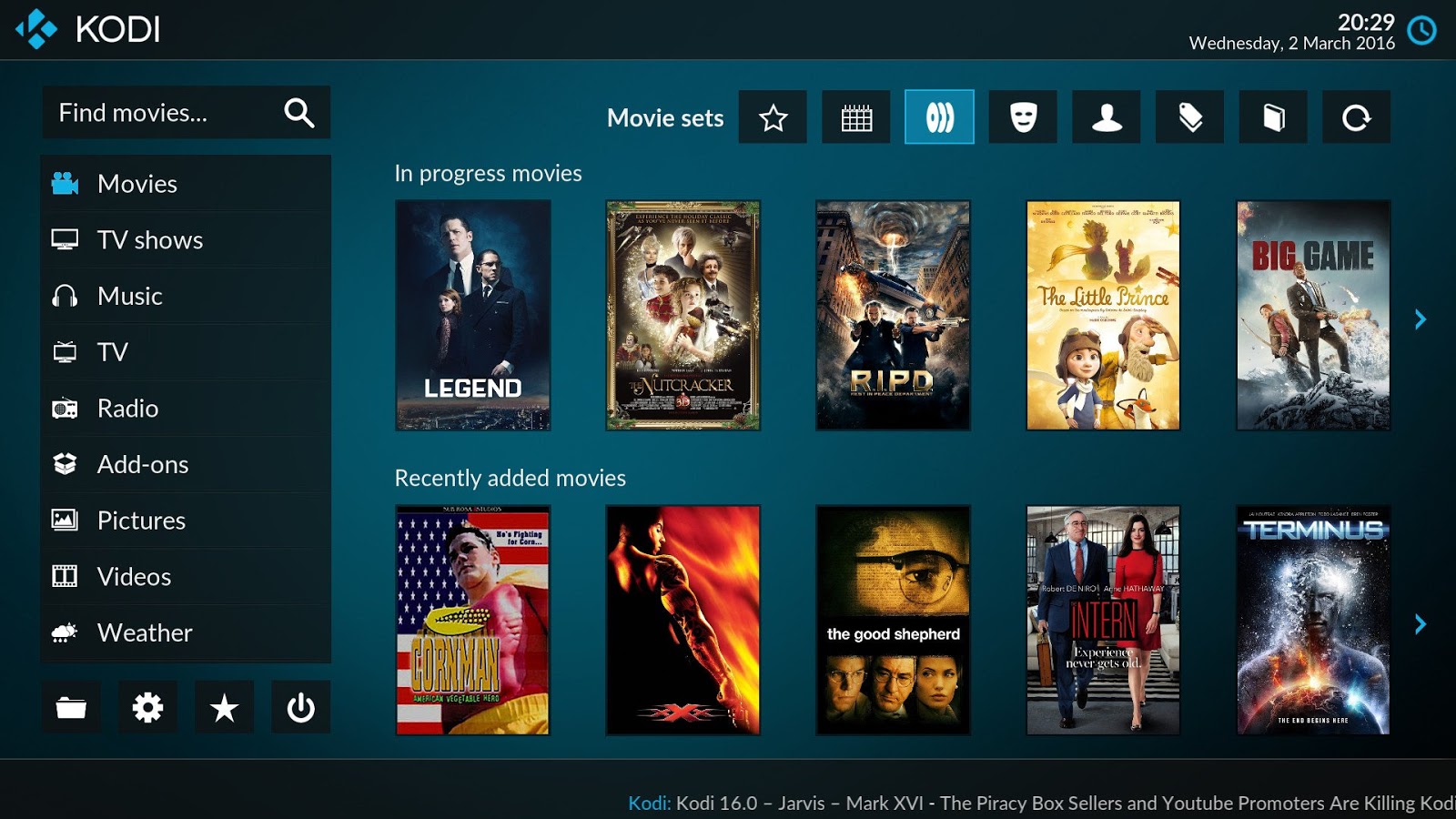

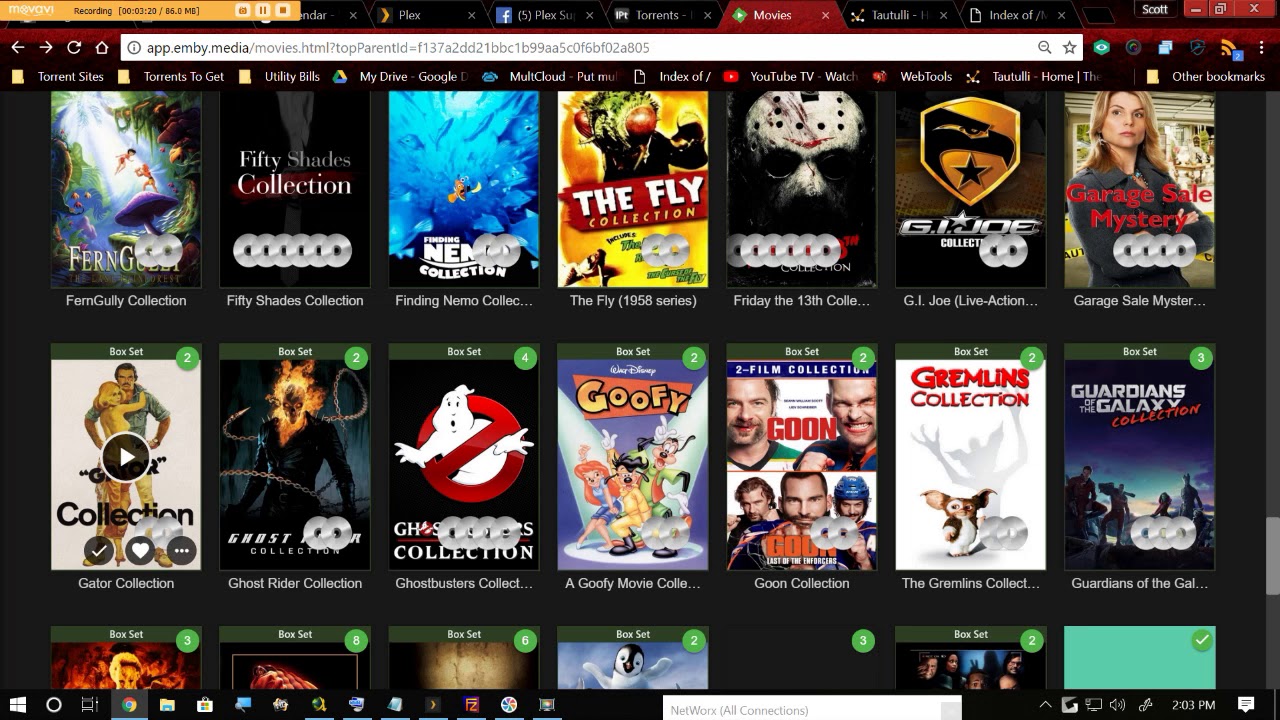

EMBY = auto()

|

|

||||||

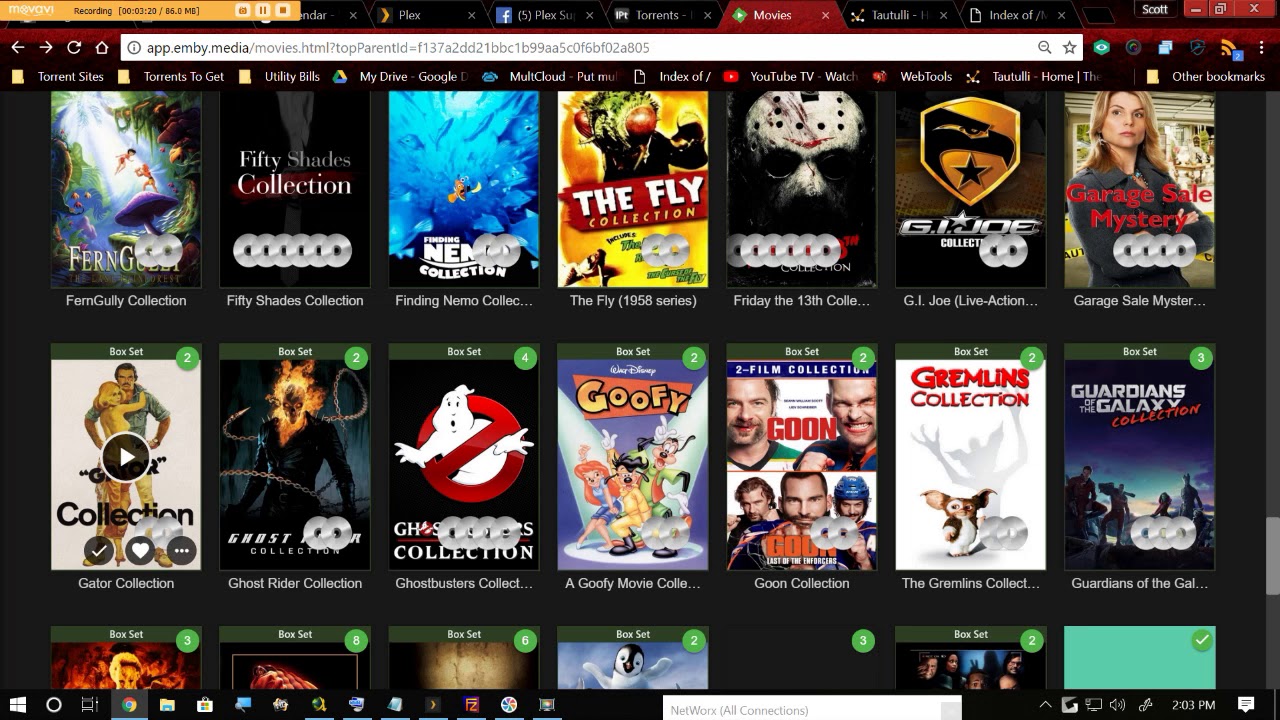

PLEX = auto()

|

|

||||||

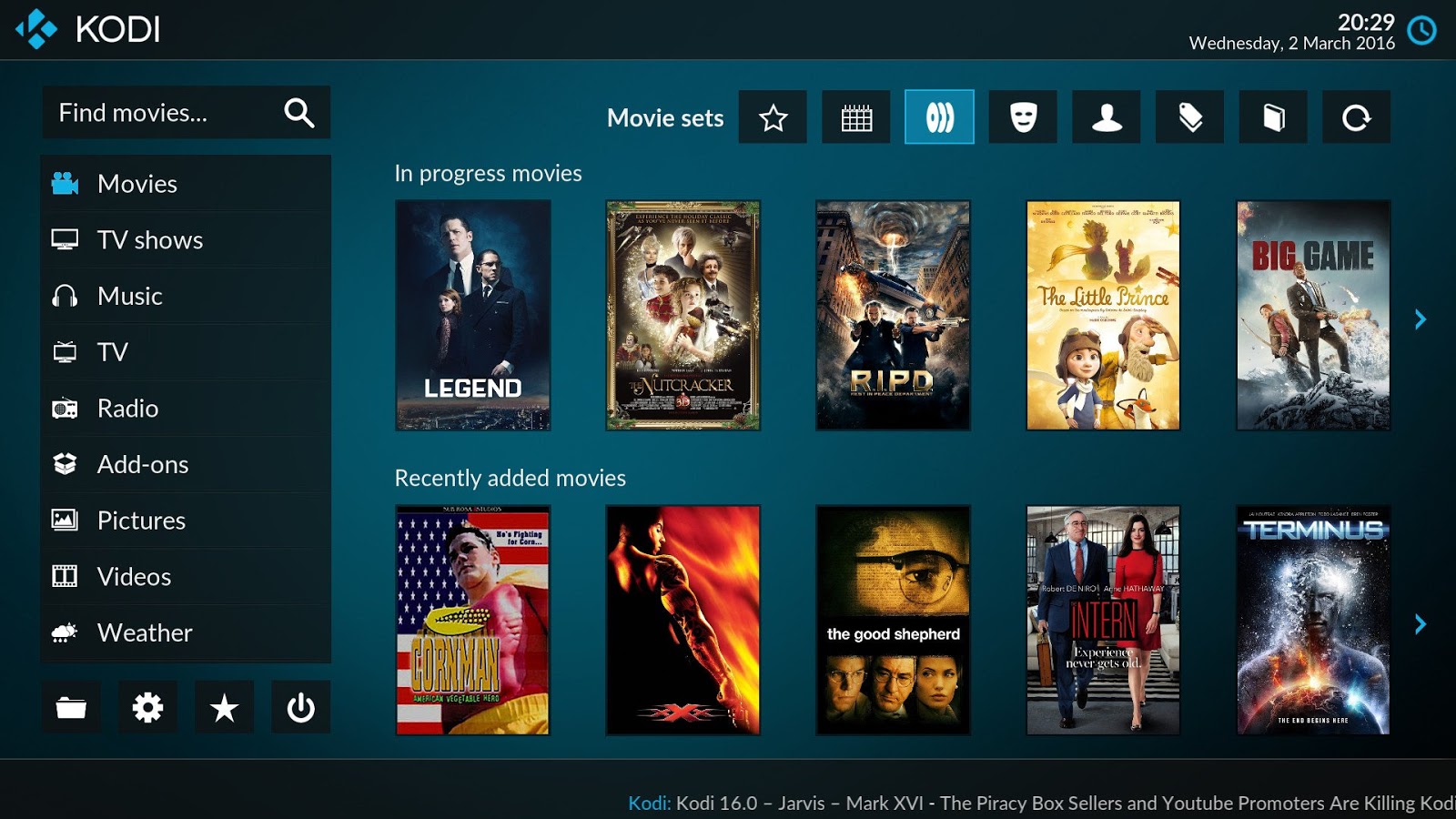

KODI = auto()

|

|

||||||

|

|

||||||

# media = EMBY

|

|

||||||

#

|

|

||||||

# def __init__(self, arg):

|

|

||||||

# self = [e for e in MediaServer if arg.upper() == self.name]

|

|

||||||

|

|

||||||

def poster_name(self, name):

|

|

||||||

if self == MediaServer.EMBY: # 保存[name].png

|

|

||||||

return name + '.png'

|

|

||||||

elif self == MediaServer.KODI: # 保存[name]-poster.jpg

|

|

||||||

return name + '-poster.jpg'

|

|

||||||

elif self == MediaServer.PLEX: # 保存 poster.jpg

|

|

||||||

return 'poster.jpg'

|

|

||||||

|

|

||||||

def image_name(self, name):

|

|

||||||

if self == MediaServer.EMBY: # name.jpg

|

|

||||||

return name + '.jpg'

|

|

||||||

elif self == MediaServer.KODI: # [name]-fanart.jpg

|

|

||||||

return name + '-fanart.jpg'

|

|

||||||

elif self == MediaServer.PLEX: # fanart.jpg

|

|

||||||

return 'fanart.jpg'
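`poster_name` and `image_name` encode a per-server naming table, and both fall through to an implicit `None` for any member without a branch. A minimal sketch of the same mapping kept in one dictionary, so an unhandled member fails loudly with `KeyError`; it also adds a case-insensitive lookup by name, which is what the commented-out `__init__` appears to attempt. The `from_name` classmethod and the `_NAMING` table are hypothetical additions, not part of the project.

```python
from enum import Enum, auto


class MediaServer(Enum):
    EMBY = auto()
    PLEX = auto()
    KODI = auto()

    @classmethod
    def from_name(cls, name: str) -> "MediaServer":
        """Case-insensitive lookup, e.g. 'emby' -> MediaServer.EMBY."""
        return cls[name.strip().upper()]

    def poster_name(self, name: str) -> str:
        return _NAMING[self][0].format(name=name)

    def image_name(self, name: str) -> str:
        return _NAMING[self][1].format(name=name)


# (poster pattern, fanart/image pattern) per server, copied from the branches above.
_NAMING = {
    MediaServer.EMBY: ('{name}.png', '{name}.jpg'),
    MediaServer.KODI: ('{name}-poster.jpg', '{name}-fanart.jpg'),
    MediaServer.PLEX: ('poster.jpg', 'fanart.jpg'),
}

if __name__ == '__main__':
    server = MediaServer.from_name('kodi')
    print(server.poster_name('ABP-123'), server.image_name('ABP-123'))
    # ABP-123-poster.jpg ABP-123-fanart.jpg
```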


@ -1,3 +0,0 @@

from addict import Dict

# class Metadata:


@ -1,115 +0,0 @@

import re

import fuckit


class PathNameProcessor:
    # Class variable
    pattern_of_file_name_suffixes = r'\.(mov|mp4|avi|rmvb|wmv|mkv|flv|ts|m2ts)$'

    # def __init__(self):

    @staticmethod
    def remove_distractions(origin_name):
        """Remove distracting tokens from a file name."""
        # Strip the video file-extension suffix
        origin_name = re.sub(PathNameProcessor.pattern_of_file_name_suffixes, '', origin_name, 0, re.IGNORECASE)

        # Normalize separators in codes containing - and _, e.g. '/-070409_621'
        origin_name = re.sub(r'[-_~*# ]', "-", origin_name, 0)

        origin_name = re.sub(r'(Carib)(bean)?', '-', origin_name, 0, re.IGNORECASE)
        origin_name = re.sub(r'(1pondo)', '-', origin_name, 0, re.IGNORECASE)
        origin_name = re.sub(r'(tokyo)[-. ]?(hot)', '-', origin_name, 0, re.IGNORECASE)
        origin_name = re.sub(r'Uncensored', '-', origin_name, 0, re.IGNORECASE)
        origin_name = re.sub(r'JAV', '-', origin_name, 0, re.IGNORECASE)
        # Remove noise strings
        origin_name = origin_name.replace('22-sht.me', '-')

        # Remove dates (years 1970-2099, optionally with month and day) from the file name
        pattern_of_date = r'(?:-)(19[789]\d|20\d{2})(-?(0\d|1[012])-?(0[1-9]|[12]\d|3[01])?)?[-.]'
        # Resolution/quality tokens that start with a letter
        pattern_of_resolution_alphas = r'(?<![a-zA-Z])(SD|((F|U)|(Full|Ultra)[-_*. ~]?)?HD|BD|(blu[-_*. ~]?ray)|[hx]264|[hx]265|HEVC)'
        # Resolution tokens that start with a digit
        pattern_of_resolution_numbers = r'(?<!\d)(4K|(1080[ip])|(720p)|(480p))'
        origin_name = re.sub(pattern_of_resolution_alphas, "-", origin_name, 0, re.IGNORECASE)
        origin_name = re.sub(pattern_of_resolution_numbers, "-", origin_name, 0, re.IGNORECASE)
        origin_name = re.sub(pattern_of_date, "-", origin_name)

        if 'FC2' in origin_name or 'fc2' in origin_name:
            origin_name = origin_name.replace('-PPV', '').replace('PPV-', '').replace('FC2PPV-', 'FC2-').replace(
                'FC2PPV_', 'FC2-')

        # Collapse runs of repeated separators
        origin_name = re.sub(r"([-.])(\1+)", r"\1", origin_name)
        # Strip trailing separators so the episode number can be detected
        origin_name = re.sub(r'[-.]+$', "", origin_name)

        return origin_name

    @staticmethod
    def extract_suffix_episode(origin_name):
        """Extract a trailing episode marker (a single character only): 123ABC, part1, ipz.A, CD1, NOP019B.HD.wmv"""
        episode = None
        with fuckit:
            # Lookbehind: take a single trailing digit as the episode number, e.g. 123
            pattern_episodes_number = r'(?<!\d)\d$'
            episode = re.findall(pattern_episodes_number, origin_name)[-1]
            origin_name = re.sub(pattern_episodes_number, "", origin_name)
        with fuckit:
            # Lookbehind: take a single trailing letter as the episode, e.g. abc
            pattern_episodes_alpha = r'(?<![a-zA-Z])[a-zA-Z]$'
            episode = re.findall(pattern_episodes_alpha, origin_name)[-1]
            origin_name = re.sub(pattern_episodes_alpha, "", origin_name)
        return episode, origin_name

    @staticmethod
    def extract_code(origin_name):
        """
        Extract the episode and the normalized code.
        """
        name = None
        episode = None
        with fuckit:
            # Find codes with or without '-': 1. digits+digits 2. letters+digits
            name = re.findall(r'(?:\d{2,}-\d{2,})|(?:[A-Z]+-?[A-Z]*\d{2,})', origin_name)[-1]
            episode = PathNameProcessor.extract_episode_behind_code(origin_name, name)
            # Insert '-' into names that lack one
            if not ('-' in name):
                # Code without '-': try to split it into parts and insert '-'
                # Letters followed by at least 2 consecutive digits, e.g. ipz221.part2, mide072hhb, n1180
                with fuckit:
                    name = re.findall(r'[a-zA-Z]+\d{2,}', name)[-1]
                    # E.g. MCDV-47 and mcdv-047 are two different titles, but SIVR-00008 and SIVR-008 are
                    # the same one; heyzo is the exception because heyzo codes use four digits
                    if "heyzo" not in name.lower():
                        name = re.sub(r'([a-zA-Z]{2,})(?:0*?)(\d{2,})', r'\1-\2', name)

        # Regex for codes containing '-' [letters-[letters]digits] with at least 2 digits; take the last match
        with fuckit:
            # MKBD_S03-MaRieS
            name = re.findall(r'[a-zA-Z\d]+-[a-zA-Z\d]*\d{2,}', name)[-1]
            # 107NTTR-037 -> NTTR-037, SIVR-00008 -> SIVR-008, except for heyzo
            if "heyzo" not in name.lower():
                searched = re.search(r'([a-zA-Z]{2,})-(?:0*)(\d{3,})', name)
                if searched:
                    name = '-'.join(searched.groups())

        return episode, name

    @staticmethod
    def extract_episode_behind_code(origin_name, code):
        episode = None

        with fuckit:
            # Lookbehind: take the single letter or digit right after the code as the episode, e.g. abc123
            result_dict = re.search(rf'(?<={code})-?((?P<alpha>([A-Z](?![A-Z])))|(?P<num>\d(?!\d)))', origin_name,
                                    re.I).groupdict()
            episode = result_dict['alpha'] or result_dict['num']
        return episode


def safe_list_get(list_in, idx, default):
    try:
        return list_in[idx]
    except IndexError:
        return default
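The comments in `extract_code` give concrete targets: `107NTTR-037 -> NTTR-037` and `SIVR-00008 -> SIVR-008`, with heyzo codes left untouched. A minimal sketch of just that final normalization step, reusing the same `([a-zA-Z]{2,})-(?:0*)(\d{3,})` pattern with a plain `None` check instead of `fuckit`, plus a video-suffix pattern whose dot is escaped so it only matches a literal `.`. The names `normalize_code` and `strip_video_suffix` are hypothetical, and the sketch does not cover episode extraction or distraction removal.

```python
import re

# Escaped dot: only a real ".ext" suffix is stripped.
VIDEO_SUFFIX = re.compile(r'\.(mov|mp4|avi|rmvb|wmv|mkv|flv|ts|m2ts)$', re.IGNORECASE)
# Letters, '-', optional leading zeros, then at least three digits.
CODE_WITH_DASH = re.compile(r'([a-zA-Z]{2,})-(?:0*)(\d{3,})')


def strip_video_suffix(file_name: str) -> str:
    return VIDEO_SUFFIX.sub('', file_name)


def normalize_code(name: str) -> str:
    """Drop a numeric prefix and surplus leading zeros; heyzo codes are kept as-is.

    Like extract_code, the name is returned unchanged when the pattern does not match.
    """
    if 'heyzo' in name.lower():
        return name
    match = CODE_WITH_DASH.search(name)
    if match is None:
        return name
    return '-'.join(match.groups())


if __name__ == '__main__':
    for raw in ['107NTTR-037', 'SIVR-00008', 'HEYZO-0123', 'ABC-12']:
        print(raw, '->', normalize_code(raw))
    print(strip_video_suffix('ABP-123.MP4'), strip_video_suffix('abcts'))
    # NTTR-037, SIVR-008, HEYZO-0123, ABC-12; then: ABP-123 abcts
```

With the backtracking in `(?:0*)(\d{3,})`, leading zeros are dropped only until three digits remain, which is why `SIVR-00008` keeps `008`.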


19

Pipfile

@ -1,19 +0,0 @@

[[source]]
name = "pypi"
url = "https://pypi.org/simple"
verify_ssl = true

[dev-packages]

[packages]
bs4 = "*"
tenacity = "*"
fuckit = "*"
requests = "*"
image = "*"
lazyxml = {editable = true,git = "https://github.com/waynedyck/lazyxml.git",ref = "python-3-conversion_wd1"}
lxml = "*"
pyquery = "*"

[requires]
python_version = "3.8"


246

Pipfile.lock

generated

@ -1,246 +0,0 @@

{
    "_meta": {
        "hash": {
            "sha256": "15bf3c6af3ec315358a0217481a13285f95fc742bb5db8a1f934e0d1c3d7d5e2"
        },
        "pipfile-spec": 6,
        "requires": {
            "python_version": "3.8"
        },
        "sources": [
            {
                "name": "pypi",
                "url": "https://pypi.org/simple",
                "verify_ssl": true
            }
        ]
    },
    "default": {
        "asgiref": {
            "hashes": [
                "sha256:5ee950735509d04eb673bd7f7120f8fa1c9e2df495394992c73234d526907e17",
                "sha256:7162a3cb30ab0609f1a4c95938fd73e8604f63bdba516a7f7d64b83ff09478f0"
            ],
            "markers": "python_version >= '3.5'",
            "version": "==3.3.1"
        },
        "beautifulsoup4": {
            "hashes": [
                "sha256:4c98143716ef1cb40bf7f39a8e3eec8f8b009509e74904ba3a7b315431577e35",
                "sha256:84729e322ad1d5b4d25f805bfa05b902dd96450f43842c4e99067d5e1369eb25",
                "sha256:fff47e031e34ec82bf17e00da8f592fe7de69aeea38be00523c04623c04fb666"
            ],
            "version": "==4.9.3"
        },
        "bs4": {
            "hashes": [
                "sha256:36ecea1fd7cc5c0c6e4a1ff075df26d50da647b75376626cc186e2212886dd3a"
            ],
            "index": "pypi",
            "version": "==0.0.1"
        },
        "certifi": {
            "hashes": [
                "sha256:1a4995114262bffbc2413b159f2a1a480c969de6e6eb13ee966d470af86af59c",
                "sha256:719a74fb9e33b9bd44cc7f3a8d94bc35e4049deebe19ba7d8e108280cfd59830"
            ],
            "version": "==2020.12.5"
        },
        "chardet": {
            "hashes": [
                "sha256:0d6f53a15db4120f2b08c94f11e7d93d2c911ee118b6b30a04ec3ee8310179fa",
                "sha256:f864054d66fd9118f2e67044ac8981a54775ec5b67aed0441892edb553d21da5"
            ],
            "markers": "python_version >= '2.7' and python_version not in '3.0, 3.1, 3.2, 3.3, 3.4'",
            "version": "==4.0.0"
        },
        "cssselect": {
            "hashes": [
                "sha256:f612ee47b749c877ebae5bb77035d8f4202c6ad0f0fc1271b3c18ad6c4468ecf",
                "sha256:f95f8dedd925fd8f54edb3d2dfb44c190d9d18512377d3c1e2388d16126879bc"
            ],
            "markers": "python_version >= '2.7' and python_version not in '3.0, 3.1, 3.2, 3.3'",
            "version": "==1.1.0"
        },
        "django": {
            "hashes": [
                "sha256:2d78425ba74c7a1a74b196058b261b9733a8570782f4e2828974777ccca7edf7",
                "sha256:efa2ab96b33b20c2182db93147a0c3cd7769d418926f9e9f140a60dca7c64ca9"
            ],
            "markers": "python_version >= '3.6'",
            "version": "==3.1.5"
        },
        "fuckit": {
            "hashes": [
                "sha256:059488e6aa2053da9db5eb5101e2498f608314da5118bf2385acb864568ccc25"
            ],
            "index": "pypi",
            "version": "==4.8.1"
        },
        "idna": {
            "hashes": [
                "sha256:b307872f855b18632ce0c21c5e45be78c0ea7ae4c15c828c20788b26921eb3f6",
                "sha256:b97d804b1e9b523befed77c48dacec60e6dcb0b5391d57af6a65a312a90648c0"
            ],
            "markers": "python_version >= '2.7' and python_version not in '3.0, 3.1, 3.2, 3.3'",
            "version": "==2.10"
        },
        "image": {
            "hashes": [
                "sha256:baa2e09178277daa50f22fd6d1d51ec78f19c12688921cb9ab5808743f097126"
            ],
            "index": "pypi",
            "version": "==1.5.33"
        },
        "lazyxml": {
            "editable": true,
            "git": "https://github.com/waynedyck/lazyxml.git",
            "ref": "f42ea4a4febf4c1e120b05d6ca9cef42556a75d5"
        },
        "lxml": {
            "hashes": [
                "sha256:0448576c148c129594d890265b1a83b9cd76fd1f0a6a04620753d9a6bcfd0a4d",
                "sha256:127f76864468d6630e1b453d3ffbbd04b024c674f55cf0a30dc2595137892d37",
                "sha256:1471cee35eba321827d7d53d104e7b8c593ea3ad376aa2df89533ce8e1b24a01",
                "sha256:2363c35637d2d9d6f26f60a208819e7eafc4305ce39dc1d5005eccc4593331c2",
                "sha256:2e5cc908fe43fe1aa299e58046ad66981131a66aea3129aac7770c37f590a644",
                "sha256:2e6fd1b8acd005bd71e6c94f30c055594bbd0aa02ef51a22bbfa961ab63b2d75",
                "sha256:366cb750140f221523fa062d641393092813b81e15d0e25d9f7c6025f910ee80",
                "sha256:42ebca24ba2a21065fb546f3e6bd0c58c3fe9ac298f3a320147029a4850f51a2",
                "sha256:4e751e77006da34643ab782e4a5cc21ea7b755551db202bc4d3a423b307db780",
                "sha256:4fb85c447e288df535b17ebdebf0ec1cf3a3f1a8eba7e79169f4f37af43c6b98",
                "sha256:50c348995b47b5a4e330362cf39fc503b4a43b14a91c34c83b955e1805c8e308",
                "sha256:535332fe9d00c3cd455bd3dd7d4bacab86e2d564bdf7606079160fa6251caacf",
                "sha256:535f067002b0fd1a4e5296a8f1bf88193080ff992a195e66964ef2a6cfec5388",
                "sha256:5be4a2e212bb6aa045e37f7d48e3e1e4b6fd259882ed5a00786f82e8c37ce77d",
                "sha256:60a20bfc3bd234d54d49c388950195d23a5583d4108e1a1d47c9eef8d8c042b3",
                "sha256:648914abafe67f11be7d93c1a546068f8eff3c5fa938e1f94509e4a5d682b2d8",
                "sha256:681d75e1a38a69f1e64ab82fe4b1ed3fd758717bed735fb9aeaa124143f051af",
                "sha256:68a5d77e440df94011214b7db907ec8f19e439507a70c958f750c18d88f995d2",
                "sha256:69a63f83e88138ab7642d8f61418cf3180a4d8cd13995df87725cb8b893e950e",
                "sha256:6e4183800f16f3679076dfa8abf2db3083919d7e30764a069fb66b2b9eff9939",
                "sha256:6fd8d5903c2e53f49e99359b063df27fdf7acb89a52b6a12494208bf61345a03",
                "sha256:791394449e98243839fa822a637177dd42a95f4883ad3dec2a0ce6ac99fb0a9d",
                "sha256:7a7669ff50f41225ca5d6ee0a1ec8413f3a0d8aa2b109f86d540887b7ec0d72a",
                "sha256:7e9eac1e526386df7c70ef253b792a0a12dd86d833b1d329e038c7a235dfceb5",
                "sha256:7ee8af0b9f7de635c61cdd5b8534b76c52cd03536f29f51151b377f76e214a1a",
                "sha256:8246f30ca34dc712ab07e51dc34fea883c00b7ccb0e614651e49da2c49a30711",
                "sha256:8c88b599e226994ad4db29d93bc149aa1aff3dc3a4355dd5757569ba78632bdf",
                "sha256:923963e989ffbceaa210ac37afc9b906acebe945d2723e9679b643513837b089",
                "sha256:94d55bd03d8671686e3f012577d9caa5421a07286dd351dfef64791cf7c6c505",
                "sha256:97db258793d193c7b62d4e2586c6ed98d51086e93f9a3af2b2034af01450a74b",
                "sha256:a9d6bc8642e2c67db33f1247a77c53476f3a166e09067c0474facb045756087f",
                "sha256:cd11c7e8d21af997ee8079037fff88f16fda188a9776eb4b81c7e4c9c0a7d7fc",
                "sha256:d8d3d4713f0c28bdc6c806a278d998546e8efc3498949e3ace6e117462ac0a5e",
                "sha256:e0bfe9bb028974a481410432dbe1b182e8191d5d40382e5b8ff39cdd2e5c5931",
                "sha256:f4822c0660c3754f1a41a655e37cb4dbbc9be3d35b125a37fab6f82d47674ebc",
                "sha256:f83d281bb2a6217cd806f4cf0ddded436790e66f393e124dfe9731f6b3fb9afe",
                "sha256:fc37870d6716b137e80d19241d0e2cff7a7643b925dfa49b4c8ebd1295eb506e"
            ],
            "index": "pypi",
            "version": "==4.6.2"
        },
        "pillow": {
            "hashes": [
                "sha256:165c88bc9d8dba670110c689e3cc5c71dbe4bfb984ffa7cbebf1fac9554071d6",
                "sha256:1d208e670abfeb41b6143537a681299ef86e92d2a3dac299d3cd6830d5c7bded",
                "sha256:22d070ca2e60c99929ef274cfced04294d2368193e935c5d6febfd8b601bf865",
                "sha256:2353834b2c49b95e1313fb34edf18fca4d57446675d05298bb694bca4b194174",
                "sha256:39725acf2d2e9c17356e6835dccebe7a697db55f25a09207e38b835d5e1bc032",
                "sha256:3de6b2ee4f78c6b3d89d184ade5d8fa68af0848f9b6b6da2b9ab7943ec46971a",
                "sha256:47c0d93ee9c8b181f353dbead6530b26980fe4f5485aa18be8f1fd3c3cbc685e",
                "sha256:5e2fe3bb2363b862671eba632537cd3a823847db4d98be95690b7e382f3d6378",
                "sha256:604815c55fd92e735f9738f65dabf4edc3e79f88541c221d292faec1904a4b17",
                "sha256:6c5275bd82711cd3dcd0af8ce0bb99113ae8911fc2952805f1d012de7d600a4c",
                "sha256:731ca5aabe9085160cf68b2dbef95fc1991015bc0a3a6ea46a371ab88f3d0913",